The emergence of AI Overviews and conversational assistants marks a fundamental shift in how information is distributed. Instead of presenting a list of links, these systems synthesize information from multiple sources to provide a single, direct answer. This process introduces a significant risk: brand semantic drift. LLMs don’t “understand” your brand; they generate probabilistic responses from the data they were trained on and, in many assistants, from web pages retrieved at answer time. Either source can include outdated press releases, incorrect third-party articles, or misinterpreted product pages.

This synthesis can produce plausible-but-wrong statements about your company’s services, security protocols, or market position. The result is a slow, silent erosion of trust with customers, partners, and regulators. Controlling your AI search brand reputation is not optional; it is a core function of modern brand governance.

The Inevitable Risk: How AI Brand Drift Happens

An LLM’s primary function is to predict the next most likely word, not to verify factual accuracy. When it synthesizes information about your brand, it blends language from your official website with data from across the web. If a respected industry blog misunderstood a key feature, or a news article contains an old pricing model, the AI may weave that inaccuracy into its answer.

Because the output is fluent and confident, users tend to accept it. This creates a dangerous feedback loop: when incorrect AI-generated text is repeated or republished on the web, it can become a source for future answers, cementing the error. For companies in regulated industries like finance or healthcare, this presents a direct compliance threat. For any organization, it represents an uncontrolled dilution of carefully crafted messaging.

A Framework for Control: The Four Layers of LLM Brand Governance

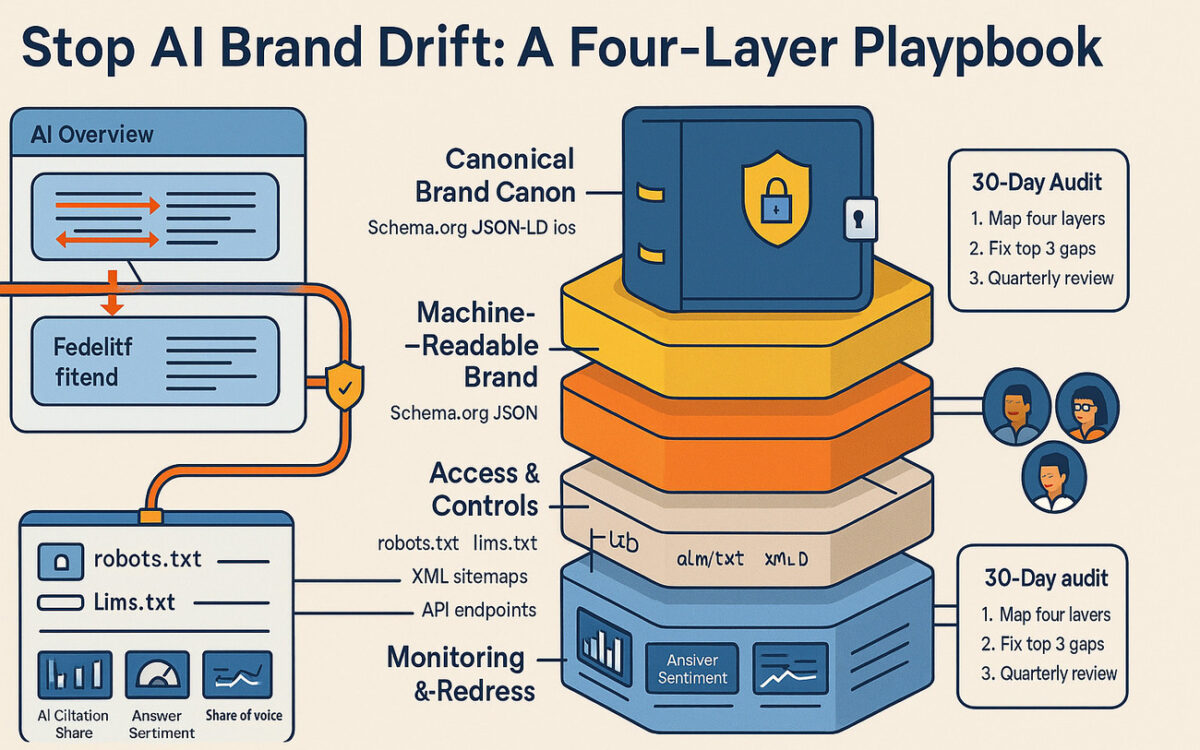

To combat AI brand drift, you need to manage how LLMs learn about and talk about you. A proactive strategy for LLM brand governance involves establishing control across four distinct layers. This approach treats your brand not just as creative content, but as structured data that machines can reliably interpret.

- The Canonical Brand Canon: This is your non-negotiable, single source of truth. It is an internal repository that defines core brand identity components: approved positioning statements, official terminology, key feature descriptions, executive bios, and documented claims. This canon becomes the foundational source material for all public-facing content.

- The Machine-Readable Brand: This layer translates your brand canon into formats that AI crawlers can easily parse and prioritize. It includes technical SEO elements that form the basis of Generative Engine Optimization. Key components are a well-defined entity home (your official homepage and About Us page), clean Schema.org markup for products and FAQs, dedicated policy documents, and structured data feeds.

- Access & Controls: Here, you explicitly guide AI crawlers. You define what information they can access and how they can use it. This includes configuring robots.txt to set crawler permissions, evaluating the emerging llms.txt proposal for pointing models to curated content, maintaining clean XML sitemaps for discoverability, and providing official data through APIs or datasets where appropriate. It also means keeping profiles on high-authority platforms that LLMs trust (like Wikipedia or industry directories) accurate and up to date.

- Monitoring & Redress: You cannot control what you do not measure. This layer involves actively tracking how AI systems cite your brand. It requires setting up monitoring for AI Overviews citations, performing regular quality assurance on answers about your brand, and establishing a workflow for requesting corrections when inaccuracies appear.
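To make Layers 1 and 2 concrete, here is a minimal Python sketch that renders approved canon fields as Schema.org `Organization` markup in JSON-LD, the format commonly embedded on an entity home page. The company, URLs, and the `BRAND_CANON` dictionary are hypothetical placeholders; a real canon would live in whatever repository your team maintains, and the properties you publish should follow the Schema.org vocabulary for your entity type.

```python
import json

# Hypothetical canon entries for a fictional company. In practice these
# fields would be pulled from the internal Canonical Brand Canon (Layer 1).
BRAND_CANON = {
    "name": "Example Corp",
    "url": "https://www.example.com/",
    "description": "Example Corp provides managed backup services for mid-sized businesses.",
    "logo": "https://www.example.com/logo.png",
    "same_as": [
        "https://en.wikipedia.org/wiki/Example_Corp",
        "https://www.linkedin.com/company/example-corp",
    ],
}


def organization_jsonld(canon: dict) -> str:
    """Render Schema.org Organization markup from approved canon fields."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": canon["name"],
        "url": canon["url"],
        "description": canon["description"],
        "logo": canon["logo"],
        # sameAs ties the entity home to trusted third-party profiles.
        "sameAs": canon["same_as"],
    }
    return json.dumps(data, indent=2)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script element placed in the page's <head>."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


print(script_tag(organization_jsonld(BRAND_CANON)))
```

Generating markup from the canon, rather than hand-editing it per page, keeps the machine-readable layer from drifting away from the approved wording.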

Measuring What Matters in the Age of AI Brand Drift

Traditional SEO metrics like keyword rankings are no longer sufficient. A top-10 organic presence still matters, because AI Overviews frequently draw from those results, but you must expand your measurement framework. The new key performance indicators for brand visibility focus on the AI-powered answer layer itself.

Your team should track:

- AI Citation Share: What percentage of AI answers for your core topics cite your domain as a source?

- Answer Sentiment: Is the language used in AI summaries about your brand positive, neutral, or negative?

- Message Fidelity: How accurately do AI-generated answers reflect your Canonical Brand Canon?

- Share-of-Voice in Answers: When a user asks a non-branded question in your category, is your brand mentioned in the AI-generated response?

These metrics provide a direct view of your AI search brand reputation and the effectiveness of your governance efforts.
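Two of these metrics reduce to simple ratios once answers are logged. The sketch below assumes your monitoring process already records, for each tracked query, the answer text, whether the query named your brand, and the domains the answer cited. The `AnswerSample` structure and the sample data are hypothetical, and brand mentions are detected with naive case-insensitive substring matching.

```python
from dataclasses import dataclass, field


@dataclass
class AnswerSample:
    """One recorded AI answer for a tracked query (hypothetical schema)."""
    query: str
    branded: bool  # True if the query itself names the brand
    answer_text: str
    cited_domains: list[str] = field(default_factory=list)


def citation_share(samples: list[AnswerSample], domain: str) -> float:
    """AI Citation Share: fraction of answers that list `domain` as a source."""
    if not samples:
        return 0.0
    cited = sum(1 for s in samples if domain in s.cited_domains)
    return cited / len(samples)


def share_of_voice(samples: list[AnswerSample], brand: str) -> float:
    """Share-of-Voice: fraction of non-branded answers that mention `brand`."""
    unbranded = [s for s in samples if not s.branded]
    if not unbranded:
        return 0.0
    needle = brand.lower()
    mentioned = sum(1 for s in unbranded if needle in s.answer_text.lower())
    return mentioned / len(unbranded)


# Hypothetical log entries for a fictional "Example Corp".
samples = [
    AnswerSample("best backup service for small businesses", False,
                 "Popular options include Example Corp and others.",
                 ["example.com", "reviews.example.net"]),
    AnswerSample("what does Example Corp do", True,
                 "Example Corp provides managed backups.", ["example.com"]),
    AnswerSample("how to back up a small office", False,
                 "Use an offsite backup service.", ["blog.example.org"]),
]

print(citation_share(samples, "example.com"))   # 2 of 3 answers cite example.com
print(share_of_voice(samples, "Example Corp"))  # 0.5 (1 of 2 non-branded answers)
```

Answer Sentiment and Message Fidelity need judgment, from a classifier or a human reviewer, so they are better tracked as labels added to each logged sample than as pure string counts.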

Operations Win: The Cross-Functional Governance Playbook

Technology alone is not the solution. Effective LLM brand governance is an operational discipline that requires collaboration across departments. Silos between SEO, Communications, and Legal are a liability in the generative era.

A successful program unites these teams in a continuous loop.

- SEO & Digital build and maintain the machine-readable brand (Layer 2) and access controls (Layer 3).

- Brand & Comms own the Canonical Brand Canon (Layer 1) and review the output (Layer 4).

- Legal & Compliance provide input on the canon and review high-risk AI answers for liability.

This cross-functional team should establish red-teaming exercises (proactively trying to make an AI say something wrong about the brand) and develop rapid-response playbooks for when brand-damaging inaccuracies are found.
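A red-teaming or rapid-response workflow needs an agreed rule for which answers escalate. One minimal approach, sketched here under stated assumptions, checks each captured answer against two hypothetical lists derived from the canon: retired claims that must not appear (such as an old price) and required facts an accurate answer should include. The patterns, the `review_answer` helper, and the example company are illustrative only; regex matching is a coarse first filter, and a reviewer from Comms or Legal still makes the final call.

```python
import re

# Hypothetical canon-derived rules for a fictional company.
RETIRED_CLAIMS = [
    r"\$49 per month",  # pricing model that has been withdrawn
    r"\bfree tier\b",   # offering that no longer exists
]
REQUIRED_FACTS = [
    r"\bSOC 2\b",       # certification an accurate answer should mention
]


def review_answer(answer: str) -> dict:
    """Flag retired claims present in an AI answer and required facts it omits."""
    flagged = [p for p in RETIRED_CLAIMS if re.search(p, answer, re.IGNORECASE)]
    missing = [p for p in REQUIRED_FACTS if not re.search(p, answer, re.IGNORECASE)]
    return {"flagged": flagged, "missing": missing,
            "needs_review": bool(flagged or missing)}


result = review_answer("Example Corp offers a free tier and costs $49 per month.")
print(result["needs_review"])  # True
```

Any answer with `needs_review` set would enter the rapid-response playbook; clean answers can be sampled less often.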

Governance Is Not a Roadblock; It Is the Guardrail for Speed

A common objection is that these controls will slow down content production and innovation. The opposite is true. Without a governance framework, teams operate with fear and uncertainty, manually checking every piece of AI-generated content and reacting to brand fires. This is what truly slows you down.

By creating a trusted ecosystem of canonical sources, structured data, and clear monitoring protocols, you build the guardrails that allow your teams to use AI tools safely and at scale. Governance provides the confidence to move faster, knowing that the foundational brand message is protected. It exchanges reactive panic for proactive control.

Your First 30 Days: The AI Brand Drift Audit

Theory is one thing; action is another. To stop AI brand drift, you must first diagnose your exposure. A focused 30-day audit is the most effective way to start.

Here is your plan:

- Map Your Four Layers: Gather your SEO, Comms, and Legal leads. For each of the four layers, document your current state. Where is your brand canon? Is your schema markup complete? What does your robots.txt say? Do you have any AI answer monitoring in place?

- Identify and Fix the Top 3 Gaps: Don’t try to fix everything at once. Identify the most glaring weaknesses. For many organizations, these are an incomplete entity home or schema, permissive or non-existent crawler policies (robots.txt/llms.txt), and zero monitoring of AI answers. Assign owners and fix them.

- Institute a Quarterly Review: Schedule a recurring quarterly meeting for this cross-functional team to review your AI citation report. This meeting turns the audit from a one-time project into a continuous governance program, ensuring your brand message remains yours in the age of AI.
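For the audit question about what your robots.txt says, Python's standard-library `urllib.robotparser` can report how a given file treats specific AI-related user agents. The agent names below are tokens published by their operators, but the list is illustrative and changes over time, and some tokens (Google-Extended, for example) govern how content is used rather than naming a separate crawler. The sample rules and URLs are hypothetical. This parser uses simple prefix matching, so its results may differ from crawlers that support wildcard patterns.

```python
from urllib.robotparser import RobotFileParser

# Illustrative AI-related user-agent tokens; confirm current names in each
# operator's documentation before relying on this list.
AI_USER_AGENTS = ["GPTBot", "Google-Extended", "ClaudeBot", "PerplexityBot", "CCBot"]

# Hypothetical robots.txt under audit.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /internal/

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def audit_robots(robots_txt: str, url: str) -> dict[str, bool]:
    """Return, for each AI user agent, whether robots.txt lets it fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in AI_USER_AGENTS}


for agent, allowed in audit_robots(SAMPLE_ROBOTS_TXT, "https://www.example.com/pricing").items():
    print(f"{agent:16} {'allowed' if allowed else 'blocked'}")
```

With these sample rules, only CCBot is blocked from /pricing, and GPTBot is blocked only under /internal/. Every agent inheriting the catch-all rule is the kind of permissive policy the audit should surface for a deliberate decision.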