The

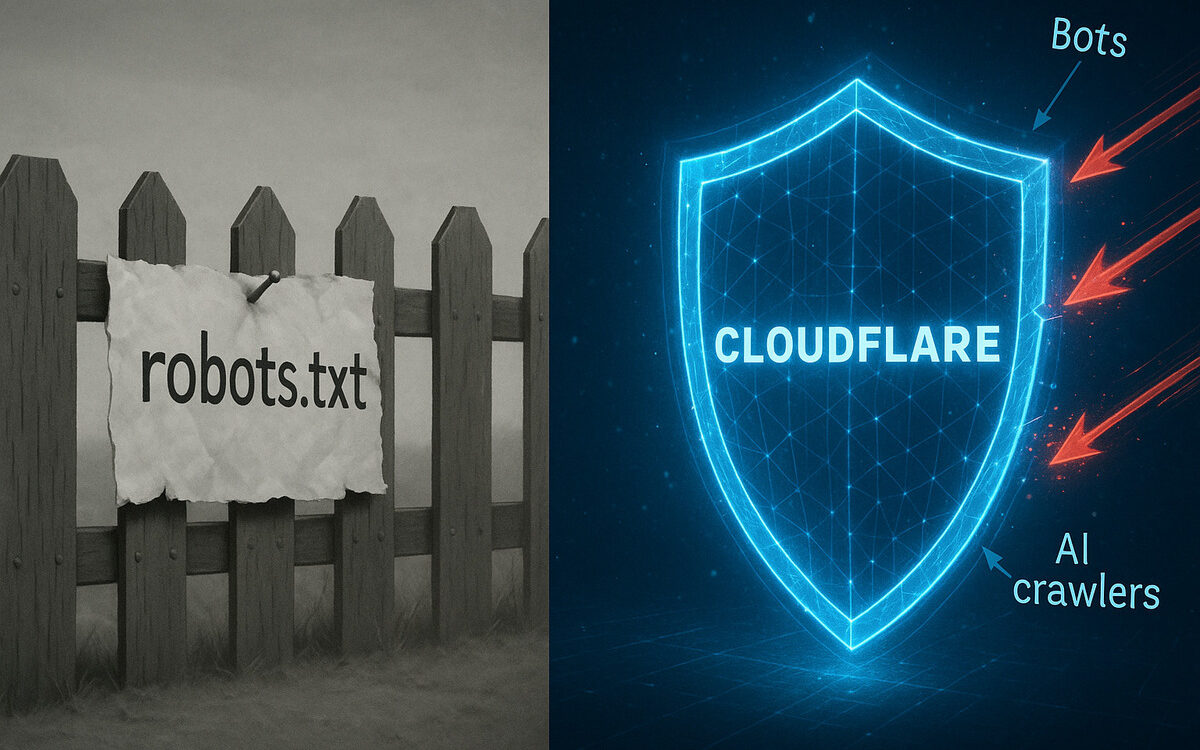

robots.txtfile is an outdated honor system, ineffective against modernAI web crawlersand malicious bots. For genuinecontent scraping protection, website owners must look beyond its severerobots.txt limitations. This article explores theCloudflare vs robots.txtdebate, showing how Cloudflare’s active security and newContent Signalsoffer the control thatrobots.txtonly pretends to provide.

Is Your robots.txt a Paper Tiger? Why You Need Cloudflare in the Age of AI

Have you ever put a “Please Keep Off The Grass” sign on your lawn? It works for people who respect the rules, but it does nothing to stop those who are determined to walk all over it. For decades, website owners have been using a similar sign, a file called robots.txt, hoping to control which bots visit their site. But in the new age of aggressive AI, that polite request is being ignored more than ever.

The debate of Cloudflare vs robots.txt isn’t just a technical discussion for developers; it’s a central issue for anyone who creates content for the web. Your business’s digital assets, your articles, your product descriptions, your unique data—it’s all at risk. Believing robots.txt is enough to protect you is a dangerous misconception. True control requires a real fence, not just a sign.

The Illusion of Control with robots.txt

The robots.txt file is part of the Robots Exclusion Protocol, a standard born in the web’s infancy in 1994. Its purpose is simple: to give instructions to visiting web crawlers, or bots, about which pages they should not access. A typical directive might look like Disallow: /private/, politely asking bots not to crawl the contents of that folder.

The key word is “politely.” The robots.txt file is not a security mechanism. It is a voluntary directive. Good bots, like Google’s Googlebot or Bing’s Bingbot, generally follow these rules because it’s in their interest to maintain a healthy relationship with webmasters. They want to be invited back.
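The voluntary nature of the protocol is easy to see in code: the check runs inside the crawler itself, and only if the crawler's author chooses to call it. The following is a minimal sketch using Python's standard urllib.robotparser module. The sample file contents, the ExampleBot user agent, and the example.com URLs are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, matching the directive discussed above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a compliant crawler would consider `url` fetchable."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/blog/post-1", "/private/report.html"):
        url = "https://example.com" + path
        # A polite crawler skips the URL when this prints False.
        # Nothing on the server side forces it to ask.
        print(path, is_allowed(ROBOTS_TXT, "ExampleBot", url))
```

Nothing in this exchange involves the web server. A crawler that never calls is_allowed simply requests the page anyway.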

Malicious bots, content scrapers, and many new AI web crawlers have no such interest. They are not polite guests; they are intruders looking to take what they want. For these bad actors, your robots.txt file isn’t a barrier; it’s a map. By listing the directories you don’t want them to see, you are effectively highlighting where you might keep your most valuable information. It’s like leaving a note on your front door that says, “The spare key is definitely not under the mat.”
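To see how little effort the "map" takes to read, consider the rough sketch below. The robots.txt file is public, so pulling out every path a site owner hoped to hide is a few lines of string handling. The sample file and the function name disallowed_paths are hypothetical, and the parsing is deliberately simplified (it ignores which user agent each rule applies to).

```python
def disallowed_paths(robots_txt: str) -> list[str]:
    """Collect every non-empty Disallow path listed in a robots.txt file."""
    paths = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop trailing comments
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


if __name__ == "__main__":
    # Hypothetical file contents for illustration only.
    sample = """\
User-agent: *
Disallow: /private/
Disallow: /admin/   # back office
Disallow:
Allow: /public/
"""
    print(disallowed_paths(sample))
```

This is why anything truly sensitive belongs behind authentication or an enforced block, not in a Disallow line.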

This exposes one of the biggest robots.txt limitations: it relies entirely on the cooperation of the bot. There is no enforcement. A bot can simply ignore the file and crawl your entire site, scraping your content, overwhelming your server, and stealing your intellectual property without a second thought. It’s a system built on trust in a digital world where trust is in short supply.

The Rise of AI and the Scraping Epidemic

The internet is currently experiencing a data gold rush, and the miners are artificial intelligence companies. Large language models (LLMs), the engines behind tools like ChatGPT and Google’s AI Overviews, are insatiably hungry for information. To learn, they must read, and their library is the entire open web—including your website.

This has spawned a new generation of sophisticated AI web crawlers designed for one purpose: mass data collection. These bots are built to be fast, efficient, and relentless. They often disguise their identity, mimic human user behavior to avoid detection, and have little to no regard for the guidelines in a robots.txt file. The value of the data they are collecting far outweighs any incentive to follow old web protocols.

Your content is the fuel for their systems. Every blog post, every product review, every unique piece of information your business has painstakingly created is a target. This isn’t just simple plagiarism; your work is being used to build commercial products, often without your consent, credit, or compensation. The scale of this content scraping problem is immense and growing daily.

Relying on robots.txt to stop AI scraping is like trying to stop a tidal wave with a picket fence. The protocol was not designed for this kind of aggressive, large-scale data harvesting. The gentlemen’s agreement of the old web is broken, and website owners need a modern solution for a modern threat.

Cloudflare vs robots.txt: A True Digital Bouncer

Where robots.txt offers a polite request, Cloudflare provides a locked door. Instead of just asking bots to behave, Cloudflare’s services actively inspect and filter every single request that comes to your website before it even reaches your hosting server. This is the core difference in the Cloudflare vs robots.txt comparison: one is a passive sign, the other is an active security guard.

Cloudflare positions its global network between your website and the internet, acting as a powerful front line of defense. This allows it to offer several layers of protection that robots.txt cannot.

Here are some of the key features:

- Bot Management: Cloudflare uses machine learning to analyze traffic behavior and identify automated bots. It can distinguish between good bots (like search engines), bad bots (like scrapers and spammers), and human visitors. You can then create rules to block, challenge, or rate-limit suspicious bots, effectively stopping them cold.

- Web Application Firewall (WAF): The WAF protects your site from common web vulnerabilities and malicious activity. It can block requests from known bad IP addresses, stop SQL injection attacks, and prevent other forms of hacking attempts that often accompany aggressive scraping.

- Super Bot Fight Mode: For businesses that need a simple yet powerful solution, this feature automatically identifies and blocks a wide range of proven malicious bots. It provides a level of Cloudflare security that is easy to deploy and immediately effective against a huge portion of automated threats.

These tools do not rely on a bot’s cooperation. They enforce your rules. A scraper that ignores your robots.txt file cannot ignore being blocked at the network edge by Cloudflare. This is real content scraping protection in action.
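The difference between asking and enforcing can be illustrated with a toy filter that sits in front of a site and decides what happens to each request. This is not Cloudflare's implementation or API. It is only a sketch of the block, challenge, and rate-limit idea, and the class names, the 1 to 99 score scale, the thresholds, and the verified_bot flag are all assumptions made for the example.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    client_ip: str
    bot_score: int      # assumed scale: 1 = almost certainly automated, 99 = almost certainly human
    verified_bot: bool  # assumed flag for known good crawlers such as search engines


class EdgeFilter:
    """Toy request filter: block, challenge, rate-limit, or allow."""

    def __init__(self, block_below=10, challenge_below=30,
                 max_requests=5, window_seconds=10.0):
        self.block_below = block_below
        self.challenge_below = challenge_below
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client_ip -> timestamps of allowed requests

    def decide(self, request: Request, now: float) -> str:
        if request.verified_bot:
            return "allow"
        if request.bot_score < self.block_below:
            return "block"
        if request.bot_score < self.challenge_below:
            return "challenge"
        # Sliding-window rate limit for everything else.
        hits = self._hits[request.client_ip]
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return "rate_limited"
        hits.append(now)
        return "allow"


if __name__ == "__main__":
    edge = EdgeFilter(max_requests=2)
    scraper = Request("203.0.113.7", bot_score=3, verified_bot=False)
    print(edge.decide(scraper, now=0.0))
```

The point of the sketch is where the decision happens: in front of the site, on every request, regardless of whether the client ever read robots.txt.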

Content Signals: A New Standard for the AI Era

Cloudflare understands that the battle against unwanted data scraping requires evolving tactics. Blocking every bot is not the answer, as you still want search engines to index your site. The need is for more granular control, especially when it comes to specifying how your content can be used by AI.

This is why they introduced Cloudflare Content Signals. This new policy is a brilliant evolution of the robots.txt standard, designed specifically for the age of AI. It allows publishers to embed machine-readable permissions directly within their robots.txt file, creating a clearer, more enforceable signal about content usage.

The policy defines three signals, each set to yes or no on a Content-Signal line (for example, Content-Signal: search=yes, ai-train=no):

- search: This tells crawlers whether your content can be used to build a search index and show links or short excerpts in search results.

- ai-input: This tells crawlers whether your content can be used as input for AI systems. For instance, if an AI is summarizing a webpage to answer a user’s query, it is using the content as an input.

- ai-train: This tells crawlers whether your content can be used to train the AI model itself. This is a much broader use case, where your data helps build the foundation of the AI’s knowledge.
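Because the signals are machine-readable, a crawler or an auditing script can read them with very little code. The sketch below assumes the syntax Cloudflare has published for the policy, a Content-Signal line in robots.txt holding comma-separated name=yes or name=no pairs. The function name parse_content_signals and the sample file are hypothetical, and the parser ignores which User-agent group a line belongs to.

```python
def parse_content_signals(robots_txt: str) -> dict[str, bool]:
    """Read Content-Signal lines into a {signal_name: allowed} mapping."""
    signals = {}
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop trailing comments
        field, sep, value = line.partition(":")
        if not sep or field.strip().lower() != "content-signal":
            continue
        for pair in value.split(","):
            name, eq, setting = pair.partition("=")
            name, setting = name.strip().lower(), setting.strip().lower()
            if eq and setting in ("yes", "no"):
                signals[name] = setting == "yes"
    return signals


if __name__ == "__main__":
    # Hypothetical robots.txt: allow search indexing, refuse AI input and training.
    sample = """\
User-Agent: *
Content-Signal: search=yes, ai-input=no, ai-train=no
Allow: /
"""
    print(parse_content_signals(sample))
```

A site that wants to stay in search results but keep its content out of training sets would publish exactly this kind of line.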

By adding these directives, you are no longer just asking bots not to visit a page. You are giving a specific, potentially legally significant instruction about how your copyrighted content may be used. It moves the conversation from “can you crawl this?” to “what are your intentions with my data?” This provides a much stronger foundation from which to defend your intellectual property.

Addressing the Standard Argument

Some might say that robots.txt is a decades-old web standard that reputable companies respect. They may feel that good actors will continue to honor these directives and that adding new rules only complicates things. This view holds that the existing system, while imperfect, is good enough.

That perspective, however, fails to grasp the fundamental shift that AI has brought to the web. Reputable companies have historically respected robots.txt because the primary use of crawling was for search indexing—a mutually beneficial activity. Today, the financial incentive to acquire massive datasets to train proprietary AI models is enormous, creating a powerful conflict of interest.

The old gentlemen’s agreements are straining under this pressure. Not all players in the AI space will adhere to old protocols when billions of dollars are on the line. Cloudflare's Content Signals are not a complication; they are a necessary clarification. They evolve the standard to address a new and pressing threat, creating an explicit signal about data rights that is harder to ignore, both technically and, potentially, in a court of law.

Your Content, Your Rules: The Time for Enforcement is Now

Your website and its content are valuable assets. You wouldn’t leave your physical store unlocked and just hope for the best. It’s time to apply the same logic to your digital property. Asking nicely for your content to be respected is no longer a viable strategy.

The choice in the Cloudflare vs robots.txt debate is clear. One is a relic of a more trusting internet, while the other is a suite of tools built for the realities of today’s digital world. It’s time to move from polite requests to active enforcement.

Don’t just ask nicely for your content to be respected; enforce it. Explore Cloudflare’s bot management and security solutions to take proactive control of your website’s content and protect it from unauthorized scraping and AI training. Take back control and ensure your hard work builds your business, not someone else’s AI model.